Neural computations underlying cognition

How does our brain enable us to pay attention selectively to some things and to ignore others? What happens in the brain when we make important decisions? Our research focuses on understanding the neural basis of cognitive phenomena such as selective attention and decision making. To address these questions, our group has developed quantitative approaches that combine neuroscience experiments, model-based analyses (e.g., signal detection theory, machine learning, dynamical systems) and computer simulations. Using cutting-edge, non-invasive techniques, including functional neuroimaging (fMRI), diffusion imaging (dMRI), high-density electroencephalography (EEG), and transcranial electrical and magnetic stimulation (tES/tMS), we directly measure or perturb brain activity in human subjects performing cognitively demanding tasks. The overarching goal is to develop a unified framework that describes how cognitive phenomena emerge from neural computations, through a systematic analysis of brain and behavior.

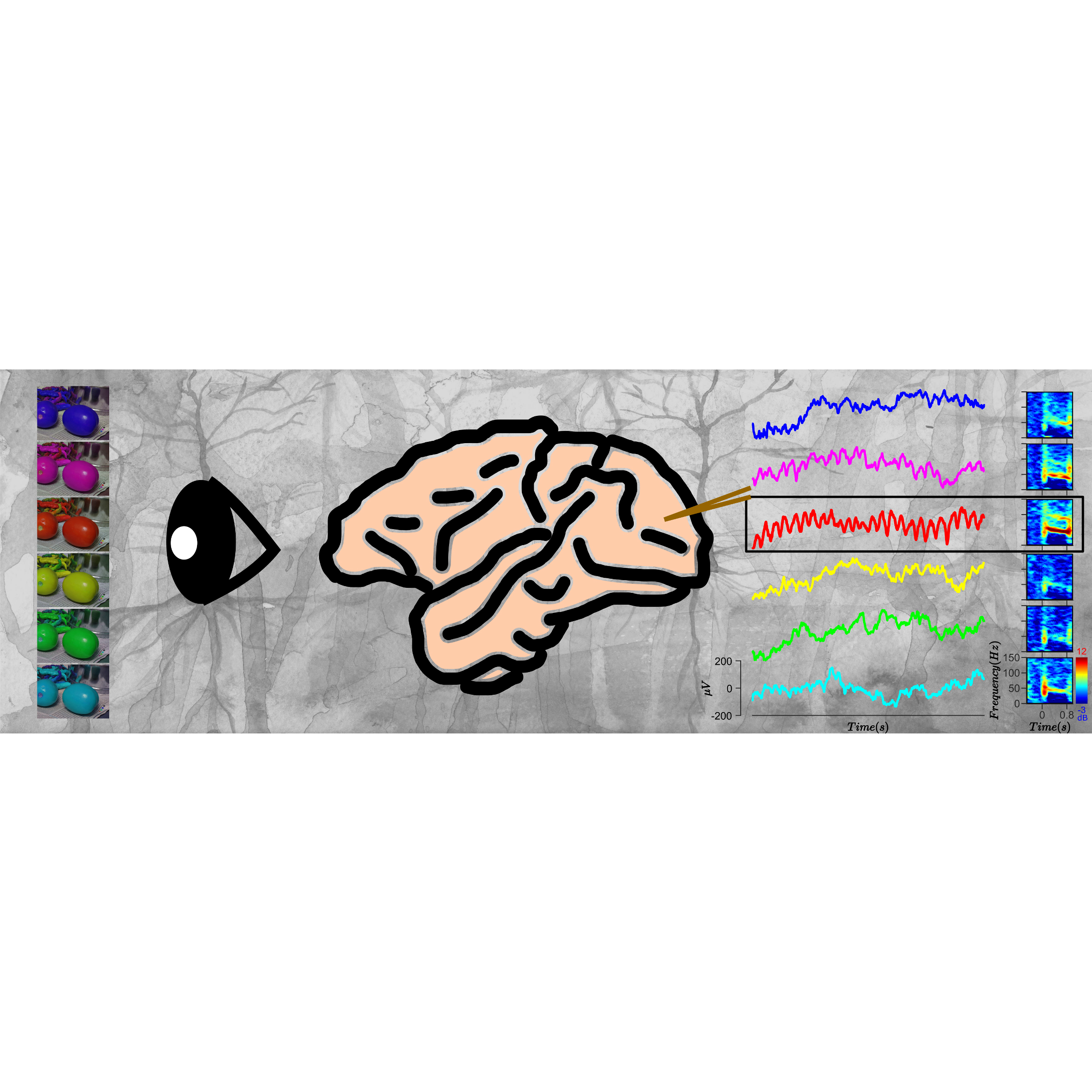

Red induces strong gamma oscillations in the brain

What changes inside the brain when one sees a colourful flower as opposed to a grayscale version of it? How do the brain signals change when one sees a green jackfruit versus a red tomato? Does the redness of the tomato matter? We studied such questions by recording signals from the primary visual cortex (an area of the brain involved in visual processing) of monkeys while they were shown various natural images. To our surprise, we found strong oscillations in the recorded signals at frequencies in the range 30-80 Hz whenever reddish images were shown. Oscillations in this range are traditionally known as gamma oscillations and have previously been linked to functions such as attention, working memory and meditation. To investigate this further, we presented uniform colour stimuli of different hues and found that gamma was indeed sensitive to the hue of the colour, with reddish hues generating the strongest gamma. In the visual cortex, gamma is known to be induced strongly by gratings (alternating black and white stripes), but the gamma generated by colour stimuli was even stronger, almost 10-fold in some cases. The magnitude of gamma depended on the purity of the colour but not so much on the overall brightness. Importantly, it was related to a particular mechanism by which colour signals received by the retinal cone receptors are processed in the brain. These findings provide new insights into the generation of gamma oscillations and the processing of colour in the brain.
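As a rough illustration of what "strong oscillations in the range 30-80 Hz" means in practice, the sketch below compares gamma-band power between a noise-only trace and the same trace with a 50 Hz rhythm added. The signals are synthetic, and the function name `band_power`, the sampling rate, and the amplitudes are assumptions made for this example; it is not the analysis pipeline used in the study.

```python
import numpy as np

def band_power(signal, fs, f_lo=30.0, f_hi=80.0):
    """Mean periodogram power of `signal` between f_lo and f_hi (in Hz)."""
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()                      # remove the DC offset
    spectrum = np.abs(np.fft.rfft(signal)) ** 2 / signal.size
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    in_band = (freqs >= f_lo) & (freqs <= f_hi)
    return spectrum[in_band].mean()

rng = np.random.default_rng(0)
fs = 1000.0                                              # assumed sampling rate (Hz)
t = np.arange(0.0, 1.0, 1.0 / fs)

# Synthetic stand-ins: broadband noise, and noise plus a 50 Hz rhythm.
baseline = rng.normal(0.0, 1.0, t.size)
stimulus = baseline + 2.0 * np.sin(2.0 * np.pi * 50.0 * t)

gamma_ratio = band_power(stimulus, fs) / band_power(baseline, fs)
print(f"gamma power, stimulus relative to baseline: {gamma_ratio:.1f}x")
```

A ratio well above 1 indicates that the added rhythm dominates the 30-80 Hz band. Real recordings would typically call for averaging over trials and a smoother spectral estimator.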

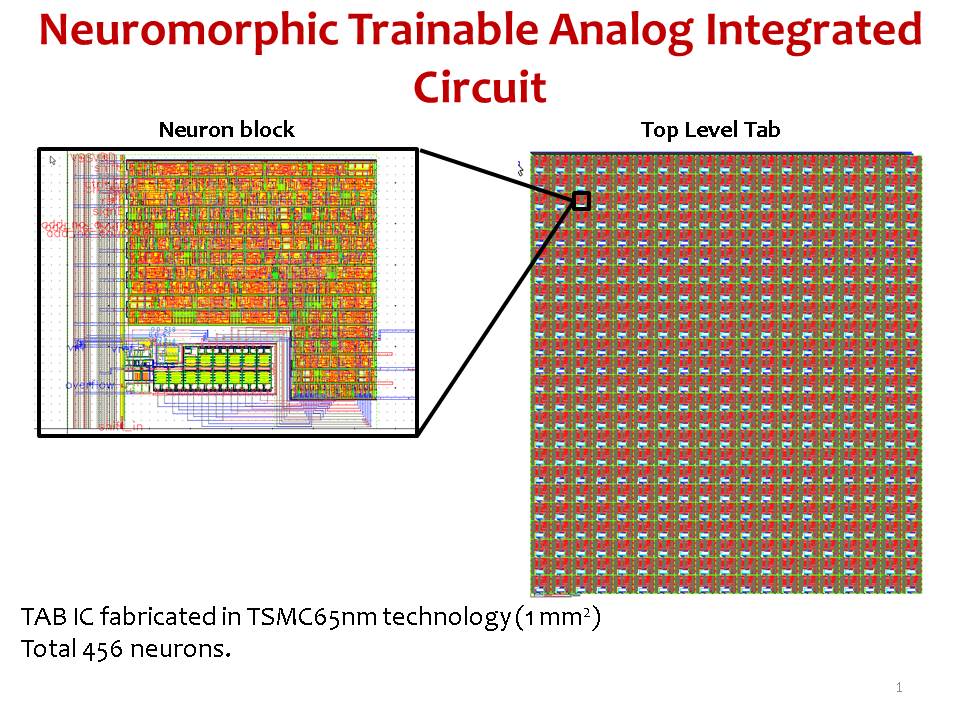

An Analogue Neuromorphic Co-Processor That Utilizes Device Mismatch for Learning Applications

As integrated circuit (IC) technology advances to smaller nanometre feature sizes, a fixed-error noise known as device mismatch is introduced owing to the dissimilarity between transistors, and this degrades the accuracy of analogue circuits. We present an analogue co-processor that uses this fixed-pattern noise to its advantage to perform complex computation. This circuit is an extension of our previously published trainable analogue block (TAB) framework and uses multiple inputs that substantially increase functionality. We present measurement results of our two-input analogue co-processor built using a 130-nm process technology and show its learning capabilities for regression and classification tasks. We also show that the co-processor, comprising 100 neurons, is a low-power system with a power dissipation of only 1.1 μW. The IC fabrication process contributes to randomness and variability in ICs, and we show that random device mismatch is favorable for the learning capability of our system as it causes variability among the neuronal tuning curves. The low-power capability of our framework makes it suitable for use as a front-end analogue co-processor in various battery-powered applications ranging from biomedical to military.
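The idea that variability among tuning curves helps learning can be sketched in software. The example below is a simplified stand-in, not a model of the chip: it assumes 100 `tanh` neurons whose input gains and offsets are drawn at random (a hypothetical proxy for device mismatch), and a linear readout fitted by ridge regression. The target function, parameter spreads, and the helper `readout_rmse` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n_neurons = 100

# Two-input regression task (an arbitrary smooth target chosen for the demo).
x_train = rng.uniform(-1.0, 1.0, size=(500, 2))
x_test = rng.uniform(-1.0, 1.0, size=(200, 2))

def target(x):
    return np.sin(np.pi * x[:, 0]) * x[:, 1]

def readout_rmse(gains, offsets, ridge=1e-3):
    """Fit a linear readout on fixed tuning curves and return the test RMSE."""
    hidden_train = np.tanh(x_train @ gains + offsets)
    hidden_test = np.tanh(x_test @ gains + offsets)
    gram = hidden_train.T @ hidden_train + ridge * np.eye(gains.shape[1])
    weights = np.linalg.solve(gram, hidden_train.T @ target(x_train))
    return np.sqrt(np.mean((hidden_test @ weights - target(x_test)) ** 2))

# "Mismatched" neurons: every neuron has its own random gains and offset.
rmse_mismatch = readout_rmse(rng.normal(0.0, 2.0, size=(2, n_neurons)),
                             rng.normal(0.0, 1.0, size=n_neurons))

# Perfectly matched neurons: all tuning curves are identical.
rmse_identical = readout_rmse(np.ones((2, n_neurons)), np.zeros(n_neurons))

print(f"test RMSE with mismatch:   {rmse_mismatch:.3f}")
print(f"test RMSE without mismatch: {rmse_identical:.3f}")
```

With identical neurons the hidden layer carries only one distinct feature, so the readout has almost nothing to combine; the random spread is what gives it a usable basis.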

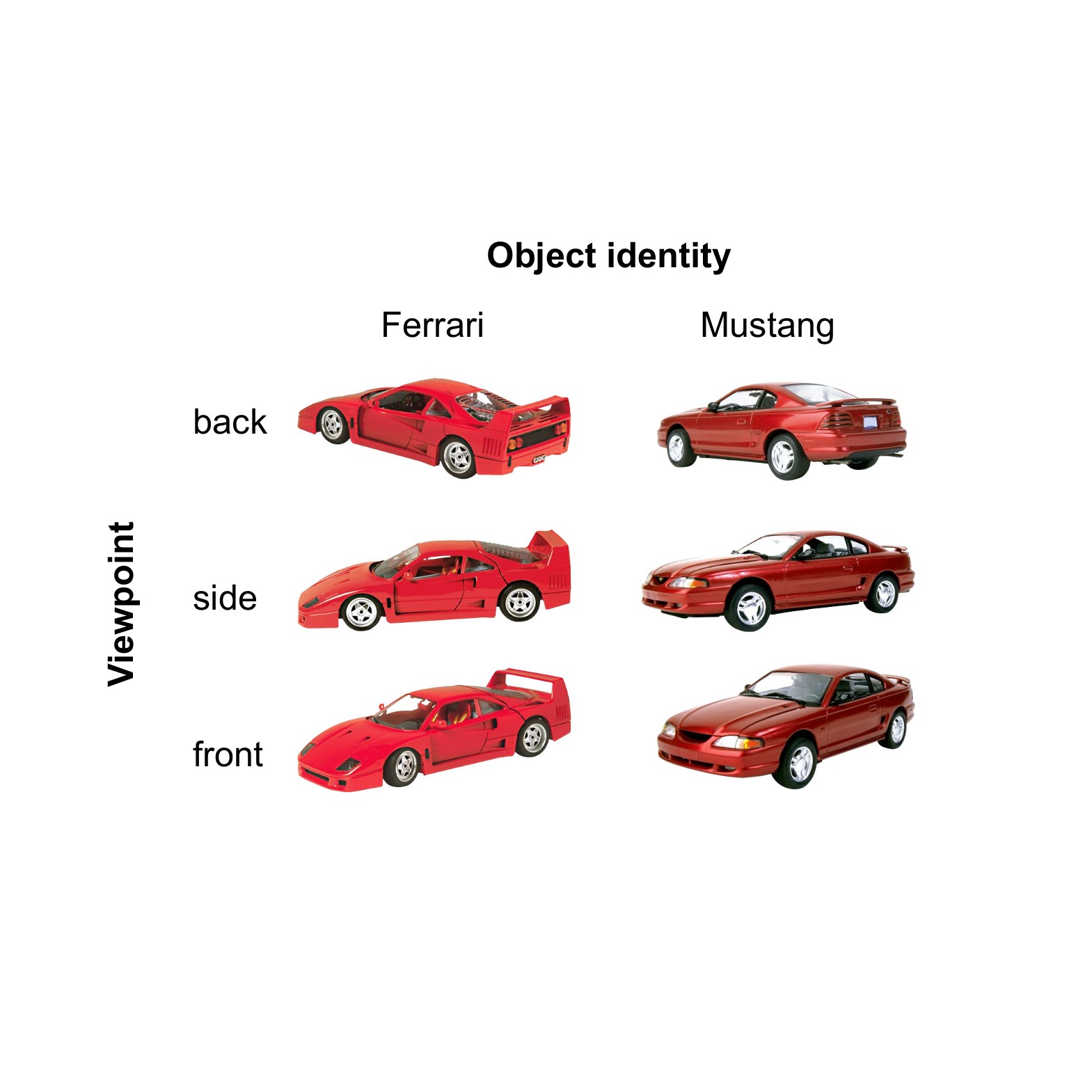

Multiplicative mixing of object identity and image attributes in single inferior temporal neurons

Telling a Ferrari from a Mustang in an image can be hard because one has to detect their unique features while ignoring large image variations due to changes in view, size, position, etc. Successful recognition requires both object identity and image attributes to be represented efficiently, but precisely how the brain does this has been unclear. Recent work from our lab has shown that single neurons in high-level visual areas combine these two signals by multiplying rather than adding them, and that doing so enables efficient decoding of both signals.
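One simple way to tell multiplicative from additive mixing, sketched here under stated assumptions, is to arrange a neuron's responses in an object-by-attribute matrix and compare two fits: a rank-1 product of an identity term and an attribute term, versus a sum of the two. The data below are simulated, and the helper names, matrix size, and noise level are hypothetical; this is not the analysis used in the study.

```python
import numpy as np

def additive_fit(responses):
    """Least-squares fit of responses[i, j] ~ a[i] + b[j]."""
    row_means = responses.mean(axis=1, keepdims=True)
    col_means = responses.mean(axis=0, keepdims=True)
    return row_means + col_means - responses.mean()

def multiplicative_fit(responses):
    """Best rank-1 fit responses[i, j] ~ a[i] * b[j], via the leading SVD term."""
    u, s, vt = np.linalg.svd(responses, full_matrices=False)
    return s[0] * np.outer(u[:, 0], vt[0])

def rmse(a, b):
    return np.sqrt(np.mean((a - b) ** 2))

rng = np.random.default_rng(2)
identity_tuning = rng.uniform(0.5, 2.0, size=8)    # 8 hypothetical objects
attribute_tuning = rng.uniform(0.5, 2.0, size=6)   # 6 hypothetical views/sizes

# Simulated neuron that multiplies the two signals, plus a little noise.
responses = np.outer(identity_tuning, attribute_tuning)
responses = responses + rng.normal(0.0, 0.02, size=responses.shape)

err_add = rmse(additive_fit(responses), responses)
err_mult = rmse(multiplicative_fit(responses), responses)
print(f"additive model RMSE:       {err_add:.3f}")
print(f"multiplicative model RMSE: {err_mult:.3f}")
```

For a neuron that truly multiplies its inputs, the rank-1 fit leaves little beyond the noise, while the additive fit leaves a structured residual.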

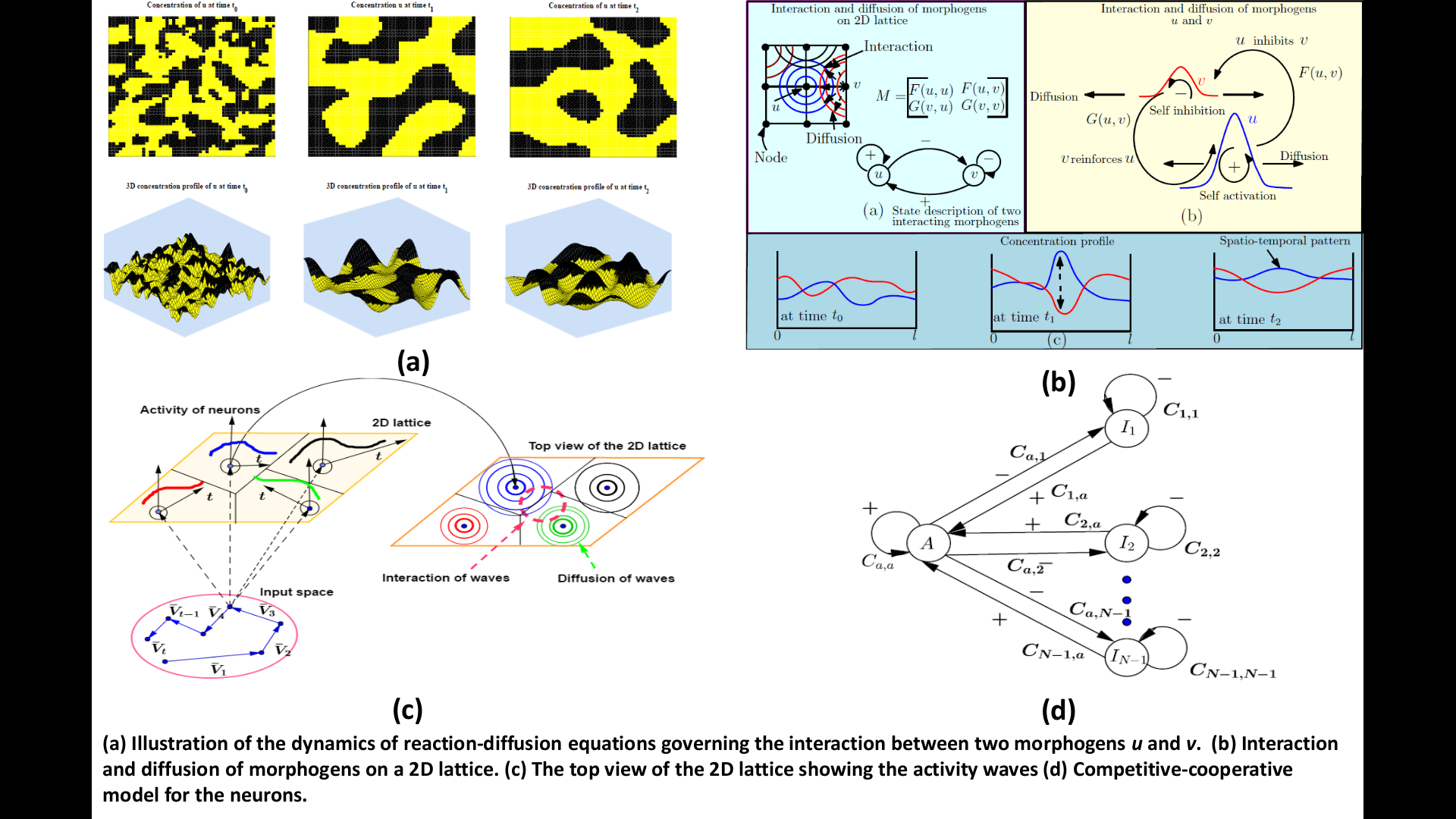

Temporal Self-Organization: A Reaction-diffusion Framework for Spatio-temporal Memories

Self-organizing maps find numerous applications in learning, clustering and recalling spatial input patterns. The traditional approach to learning spatio-temporal patterns is to incorporate time into the output space of a self-organizing map along with heuristic update rules that work well in practice. Inspired by the pioneering work of Alan Turing, who used reaction-diffusion equations to explain spatial pattern formation, we develop an analogous theoretical model for a spatio-temporal memory to learn and recall temporal patterns. The contribution of the paper is threefold: (a) Using coupled reaction-diffusion equations, we develop a theory from first principles for constructing a spatio-temporal self-organizing map (STSOM) and derive an update rule for learning based on the gradient of a potential function. (b) We analyze the dynamics of our algorithm and derive conditions for optimally setting the model parameters. (c) We mathematically quantify the temporal plasticity effect observed during recall in response to the input dynamics. The simulation results show that the proposed algorithm outperforms self-organizing maps with temporal activity diffusion (SOMTAD), neural gas with temporal activity diffusion (GASTAD) and spatio-temporal map formation based on a potential function (STMPF) in the presence of correlated noise for the same data set and similar training conditions.
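To give a feel for how a reaction-diffusion equation can carry temporal context across a map, the sketch below integrates a generic one-dimensional equation, du/dt = D ∇²u − λu + input, on a ring of map nodes. It is not the STSOM model or its update rule, which are not specified here; the function `step`, the coefficients, and the ring layout are assumptions chosen only to show activity from one node spreading to its neighbours and fading over time.

```python
import numpy as np

def step(u, drive, diffusion=0.2, decay=0.1, dt=1.0):
    """One explicit Euler step of du/dt = D * laplacian(u) - decay * u + drive."""
    laplacian = np.roll(u, 1) + np.roll(u, -1) - 2.0 * u   # ring of nodes
    return u + dt * (diffusion * laplacian - decay * u + drive)

n_nodes = 21
activity = np.zeros(n_nodes)

# A brief input excites node 10 for a single time step.
pulse = np.zeros(n_nodes)
pulse[10] = 1.0
activity = step(activity, pulse)

# The input is then removed; activity diffuses outwards and decays.
for _ in range(5):
    activity = step(activity, np.zeros(n_nodes))

print(np.round(activity[7:14], 3))
```

The lingering, spatially spread trace is what lets later inputs be interpreted in the context of earlier ones. The explicit scheme used here is only stable for small enough `diffusion * dt`.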